(No relation to that Inspector guy…) Detector Gadget is an eDiscovery and digital forensics analysis tool that leverages bulk_extractor to identify and extract features from digital evidence. Built with a containerized architecture, it provides a web interface for submitting, processing, and visualizing forensic analysis data.

As legal proceedings increasingly rely on digital evidence, organizations need tools that can search large data stores quickly and accurately. Detector Gadget is built to streamline that discovery process: by driving bulk_extractor, it automates feature identification and extraction from digital evidence, reducing the time and complexity of traditional forensic methods.

Challenges in eDiscovery and Digital Forensics

The sheer volume and diversity of modern digital data create major hurdles for investigators, legal professionals, and security teams. Conventional forensic tools often struggle to handle large-scale analyses, making quick identification of relevant data a painstaking task. Additionally, it can be difficult to coordinate among multiple stakeholders—attorneys, forensic analysts, and IT teams—when the technology stack is fragmented.

Detector Gadget was developed to address these challenges. It combines containerized deployment, a unified web interface, and automated data pipelines into a single tool for eDiscovery and forensic analysis.

Containerized Architecture for Scalability and Reliability

One of Detector Gadget’s core design decisions is its containerized architecture. Each aspect of the platform—data ingestion, processing, and reporting—runs within its own container. This modular approach provides several benefits:

• Scalability: You can add or remove containers to handle fluctuating workloads, so the system can keep up with investigations of different sizes.

• Portability: Containerization makes Detector Gadget simple to deploy in various environments, whether on on-premises servers or on cloud infrastructure such as AWS or Azure.

• Security and Isolation: Because processes run in isolated containers, a vulnerability or misconfiguration in one is less likely to affect the rest of the system.

Bulk_extractor at the Core

Detector Gadget’s forensic engine is powered by bulk_extractor, a widely used command-line tool known for its ability to detect and extract multiple types of digital artifacts. Whether it’s credit card numbers, email headers, or other sensitive data hidden within disk images, bulk_extractor systematically scans and indexes vital information. This eliminates the guesswork in searching for specific data types and helps investigators home in on the exact evidence relevant to an inquiry.
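As a rough illustration of what the extracted output looks like downstream, the sketch below parses bulk_extractor feature-file lines. It assumes the usual layout of tab-separated offset, feature, and context fields with `#` comment lines; the `Feature` type, `parse_feature_lines` helper, and sample lines are hypothetical and not taken from Detector Gadget's code.

```python
from typing import Iterator, NamedTuple


class Feature(NamedTuple):
    offset: str   # position in the image; kept as text because it may be a forensic path
    value: str    # the extracted feature, e.g. an email address
    context: str  # surrounding bytes, if the scanner recorded them


def parse_feature_lines(lines) -> Iterator[Feature]:
    """Yield one Feature per data line, skipping comments and blank lines."""
    for line in lines:
        line = line.rstrip("\n")
        if not line or line.startswith("#"):
            continue
        parts = line.split("\t")
        if len(parts) < 2:
            continue  # not a well-formed record
        context = parts[2] if len(parts) > 2 else ""
        yield Feature(parts[0], parts[1], context)


# Illustrative sample, not real evidence.
SAMPLE = [
    "# illustrative header comment\n",
    "1024\talice@example.com\tFrom: alice@example.com\n",
    "2048-GZIP-17\tbob@example.org\tto bob@example.org today\n",
]

features = list(parse_feature_lines(SAMPLE))
for feature in features:
    print(feature.offset, feature.value)
```

Keeping the offset as a string is a deliberate choice here, since offsets for data found inside compressed regions are not plain integers.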

The Web Interface: Streamlined Submission and Visualization

A standout feature of Detector Gadget is its intuitive web interface. Rather than grappling with command-line operations, investigators and eDiscovery professionals can:

• Submit Evidence: Upload disk images, file snapshots, or directory contents via a simple drag-and-drop interface.

• Configure Analysis: Select from various scanning options and data filters, customizing the bulk_extractor engine to focus on particular file types, geographical metadata, or communications records.

• Monitor Progress: Watch in real time as the system processes large data sets, with rough time estimates and resource utilization metrics.

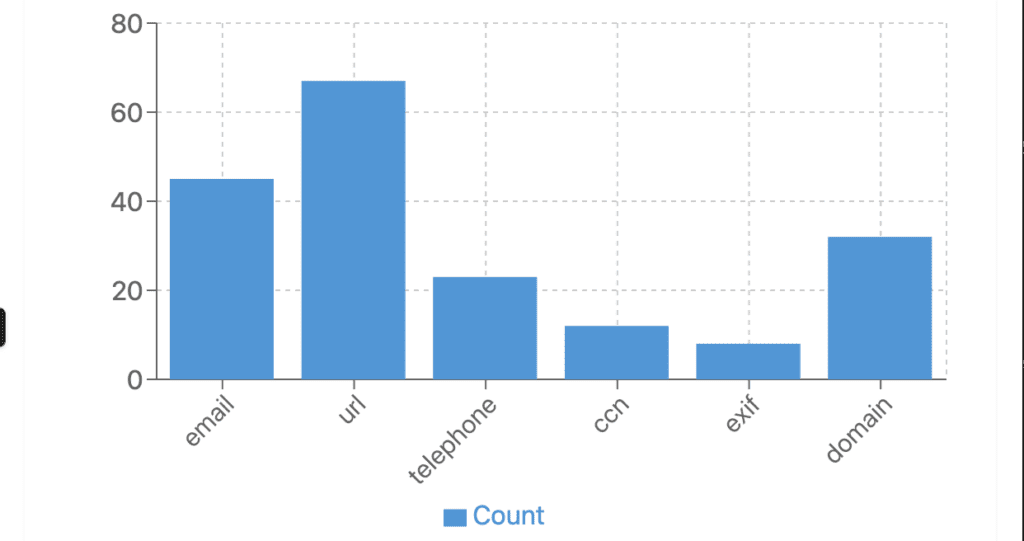

• View Results: Detector Gadget’s dashboards show interactive charts and graphs that visualize extracted features, from keyword hits to identified email addresses or financial details—making it easier to pinpoint patterns in the data.
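To give a sense of the aggregation a dashboard chart might sit on, here is a minimal sketch that counts extracted email addresses per domain. The `top_email_domains` function and the input list are hypothetical; how Detector Gadget actually prepares chart data is not described here.

```python
from collections import Counter


def top_email_domains(addresses, limit=5):
    """Count addresses per domain and return the most common ones."""
    domains = Counter()
    for address in addresses:
        _, sep, domain = address.rpartition("@")
        if sep and domain:
            domains[domain.lower()] += 1
    return domains.most_common(limit)


# Illustrative input; in practice these would come from extracted features.
emails = ["alice@example.com", "bob@Example.com", "carol@example.org", "not-an-email"]
chart_data = top_email_domains(emails)
print(chart_data)
```

The resulting list of (domain, count) pairs maps directly onto the labels and values of a bar chart.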

Secure Collaboration and Audit Trails

In eDiscovery or digital forensics, it’s vital to maintain an irrefutable chain of custody and accurate tracking of user actions. Detector Gadget implements robust user authentication and role-based access controls, ensuring that only authorized personnel can perform specific tasks. Every action—from uploading evidence to exporting reports—is logged for audit trail purposes, satisfying compliance standards and safeguarding the integrity of the investigation.
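One common way to make an audit trail tamper-evident is to chain entries by hash, so that each record covers the one before it. The sketch below shows that idea only; it is an assumption about how such logging could be done, not a description of Detector Gadget's logging, and the field names are hypothetical. Timestamps are left out to keep the example deterministic.

```python
import hashlib
import json


def _digest(body):
    """Hash an entry body in a stable, key-sorted JSON form."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def append_entry(log, user, action, detail):
    """Append an audit entry whose hash covers the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else "0" * 64
    body = {"user": user, "action": action, "detail": detail, "prev": prev_hash}
    log.append({**body, "hash": _digest(body)})


def verify(log):
    """Return True if every entry's hash and back-link are consistent."""
    prev_hash = "0" * 64
    for entry in log:
        body = {key: entry[key] for key in ("user", "action", "detail", "prev")}
        if entry["prev"] != prev_hash or entry["hash"] != _digest(body):
            return False
        prev_hash = entry["hash"]
    return True


audit_log = []
append_entry(audit_log, "admin", "upload_evidence", "disk01.img")
append_entry(audit_log, "admin", "export_report", "job 1")
print(verify(audit_log))
```

Editing or reordering an earlier entry breaks the chain. Detecting truncation of the most recent entries would additionally require storing the latest hash somewhere outside the log.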

Deployment and Integration

Detector Gadget also supports smooth integration with existing legal document management systems, case management platforms, and investigative workflows. The containerized design, combined with RESTful APIs, allows organizations to connect Detector Gadget’s findings with other collaboration and storage solutions. Whether you’re archiving analysis reports or triggering a deeper look into suspicious artifacts, the flexible architecture supports a wide range of custom integrations.
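An integration would typically start with a small client that requests job data as JSON. The sketch below builds such a request with the standard library. The `/api/jobs/<id>` path and the bearer-token header are hypothetical placeholders, since the actual endpoints are not listed here; only the base URL comes from the installation steps.

```python
import json
import urllib.request

BASE_URL = "http://localhost:5000"  # from the installation steps


def build_job_request(job_id, token=None):
    """Build (but do not send) a GET request for a job's results.

    The /api/jobs/<id> path and the bearer-token header are hypothetical.
    """
    request = urllib.request.Request(f"{BASE_URL}/api/jobs/{job_id}", method="GET")
    request.add_header("Accept", "application/json")
    if token:
        request.add_header("Authorization", f"Bearer {token}")
    return request


def fetch_job(job_id, token=None, timeout=10):
    """Send the request and decode the JSON body."""
    with urllib.request.urlopen(build_job_request(job_id, token), timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


request = build_job_request(42)
print(request.get_method(), request.full_url)
```

Separating request construction from sending keeps the client easy to test without a running server.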

PROTOTYPING TIME: 30m(ish)

Features

- Digital Forensics Analysis: Extract emails, credit card numbers, URLs, and more from digital evidence

- Web-based Interface: Simple dashboard for job submission and results visualization

- Data Visualization: Interactive charts and graphs for analysis results

- Asynchronous Processing: Background job processing with Celery

- Containerized Architecture: Kali Linux container for bulk_extractor and Python container for the web application

- Report Generation: Automated generation and delivery of analysis reports

- RESTful API: JSON API endpoints for programmatic access

Architecture

Detector Gadget consists of several containerized services:

- Web Application (Flask): Handles user authentication, job submission, and results display

- Background Worker (Celery): Processes analysis jobs asynchronously

- Bulk Extractor (Kali Linux): Performs the actual forensic analysis

- Database (PostgreSQL): Stores users, jobs, and extracted features

- Message Broker (Redis): Facilitates communication between web app and workers
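The interaction between these services follows a producer/consumer pattern: the web app records a job and enqueues it, and a worker picks it up later. The sketch below imitates that flow with the standard library only; `queue.Queue` stands in for Redis, a dict for the PostgreSQL jobs table, and `analyze` for the bulk_extractor step. All names are hypothetical and this is not the project's Celery code.

```python
import queue
import threading

job_queue = queue.Queue()   # stands in for the Redis broker
results = {}                # stands in for the PostgreSQL jobs table


def analyze(job_id, path):
    """Placeholder for the step that would invoke bulk_extractor."""
    return {"job_id": job_id, "path": path, "status": "completed"}


def worker():
    """Pull jobs until a None sentinel arrives, like a background worker."""
    while True:
        job = job_queue.get()
        if job is None:
            job_queue.task_done()
            break
        job_id, path = job
        results[job_id] = analyze(job_id, path)
        job_queue.task_done()


def submit_job(job_id, path):
    """What the web app does: record the job as pending and enqueue it."""
    results[job_id] = {"job_id": job_id, "path": path, "status": "pending"}
    job_queue.put((job_id, path))


thread = threading.Thread(target=worker)
thread.start()
submit_job(1, "/evidence/disk01.img")
job_queue.put(None)   # tell the worker to stop
thread.join()
print(results[1]["status"])
```

Because submission returns as soon as the job is queued, the web request stays fast even when the analysis itself takes a long time.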

Getting Started

Prerequisites

- Docker and Docker Compose

- Git

Installation

- Clone the repository

git clone https://github.com/yourusername/detector-gadget.git

cd detector-gadget

- Start the services

docker-compose up -d

- Initialize the database

curl http://localhost:5000/init_db

- Access the application

Open your browser and navigate to http://localhost:5000

Default admin credentials:

- Username: admin

- Password: admin

Usage

Submitting a Job

- Log in to the application

- Navigate to “Submit Job”

- Upload a file or provide a URL to analyze

- Specify an output destination (email or S3 URL)

- Submit the job
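Since the output destination can be either an email address or an S3 URL, a submission handler has to tell the two apart. The sketch below shows one way to do that. It assumes S3 destinations use the `s3://bucket/key` form, and `classify_destination` is a hypothetical helper, not a function from the project.

```python
import re
from urllib.parse import urlparse

# Deliberately loose email check; it only rejects obviously malformed input.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def classify_destination(value):
    """Return 'email' or 's3' for a recognized destination, else raise ValueError."""
    value = value.strip()
    parsed = urlparse(value)
    if parsed.scheme == "s3" and parsed.netloc:
        return "s3"
    if EMAIL_RE.match(value):
        return "email"
    raise ValueError(f"unsupported output destination: {value!r}")


print(classify_destination("analyst@example.com"))
print(classify_destination("s3://case-bucket/reports/"))
```

Rejecting unknown destinations at submission time avoids running a long analysis whose report cannot be delivered.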

Viewing Results

- Navigate to “Dashboard” to see all jobs

- Click on a job to view detailed results

- Explore the visualizations and extracted features

Development

Running Tests

# Install test dependencies

gem install rspec httparty rack-test

# Run tests against a running application

rake test

# Or run tests in Docker

rake docker_test

Project Structure

detector-gadget/

├── Dockerfile.kali # Kali Linux with bulk_extractor

├── Dockerfile.python # Python application

├── README.md # This file

├── Rakefile # Test tasks

├── app.py # Main Flask application

├── celery_init.py # Celery initialization

├── docker-compose.yml # Service orchestration

├── entrypoint.sh # Container entrypoint

├── requirements.txt # Python dependencies

├── spec/ # RSpec tests

│ ├── app_spec.rb # API tests

│ ├── fixtures/ # Test fixtures

│ └── spec_helper.rb # Test configuration

├── templates/ # HTML templates

│ ├── dashboard.html # Dashboard view

│ ├── job_details.html # Job details view

│ ├── login.html # Login form

│ ├── register.html # Registration form

│ └── submit_job.html # Job submission form

└── utils.py # Utility functions and tasks

Customization

Adding New Feature Extractors

Modify the process_job function in utils.py to add new extraction capabilities:

def process_job(job_id, file_path_or_url):
    # ...existing code...

    # Add custom bulk_extractor parameters; each "-e NAME" enables one scanner
    client.containers.run(
        'bulk_extractor_image',
        command='-o /output -e email -e url -e ccn -e YOUR_NEW_SCANNER /input/file',
        volumes={
            # Evidence is mounted read-only; results go to a writable output dir
            file_path: {'bind': '/input/file', 'mode': 'ro'},
            output_dir: {'bind': '/output', 'mode': 'rw'}
        },
        remove=True  # delete the container once the scan finishes
    )

    # ...existing code...

Configuring Email Delivery

Set these environment variables in docker-compose.yml:

environment:
  - SMTP_HOST=smtp.your-provider.com
  - SMTP_PORT=587
  - SMTP_USER=your-username
  - SMTP_PASS=your-password
  - SMTP_FROM=noreply@your-domain.com

Production Deployment

For production environments:

- Update secrets:

  - Generate a strong SECRET_KEY

  - Change default database credentials

  - Use environment variables for sensitive information

- Configure TLS/SSL:

  - Set up a reverse proxy (Nginx, Traefik)

  - Configure SSL certificates

- Backups:

  - Set up regular database backups

- Monitoring:

  - Implement monitoring for application health
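For the SECRET_KEY item, the sketch below shows one way to generate a strong value and to refuse to start when the environment does not provide one. The `load_secret_key` helper and the 32-character minimum are assumptions made for illustration, not part of the project.

```python
import os
import secrets


def load_secret_key(environ=os.environ):
    """Read SECRET_KEY from the environment and refuse weak values."""
    key = environ.get("SECRET_KEY", "")
    if len(key) < 32:
        raise RuntimeError("SECRET_KEY is missing or shorter than 32 characters")
    return key


# Generate a value once and store it in your secret manager or .env file.
new_key = secrets.token_hex(32)   # 32 random bytes, 64 hex characters
print(len(new_key))
```

Failing fast on a missing key is usually safer than falling back to a hard-coded default.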

Security Considerations

- All user-supplied files are processed in isolated containers

- Passwords are securely hashed with Werkzeug’s password hashing

- Protected routes require authentication

- Input validation is performed on all user inputs
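To illustrate what salted password hashing involves, here is a standard-library sketch using PBKDF2-HMAC-SHA256. It is a stand-in for explanation only: the application itself uses Werkzeug's helpers, and the function names and iteration count below are assumptions.

```python
import hashlib
import hmac
import os

ITERATIONS = 200_000  # assumed work factor; tune for your hardware


def hash_password(password, salt=None):
    """Return (salt, digest) using PBKDF2-HMAC-SHA256."""
    salt = salt or os.urandom(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, digest


def check_password(password, salt, expected):
    """Recompute the digest with the stored salt and compare in constant time."""
    _, digest = hash_password(password, salt)
    return hmac.compare_digest(digest, expected)


salt, stored = hash_password("correct horse battery staple")
print(check_password("correct horse battery staple", salt, stored))
print(check_password("admin", salt, stored))
```

The random salt means two users with the same password end up with different stored digests, and the constant-time comparison avoids leaking how many leading bytes matched.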

Future Development

- User roles and permissions

- Advanced search capabilities

- PDF report generation

- Timeline visualization

- Case management

- Additional forensic tools

Troubleshooting

Common Issues

Bulk Extractor container fails to start

# Check container logs

docker logs detector-gadget_bulk_extractor_1

# Rebuild the container

docker-compose build --no-cache bulk_extractor

Database connection issues

# Ensure PostgreSQL is running

docker-compose ps db

# Check connection parameters

docker-compose exec web env | grep DATABASE_URL
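Once you have the DATABASE_URL value, it can help to break it into parts and confirm that the host, port, and database name are what you expect. The sketch below does this with the standard library and masks the password. The sample URL is hypothetical; substitute the output of the grep command above.

```python
from urllib.parse import urlparse


def describe_database_url(url):
    """Split a DATABASE_URL into its parts, masking the password."""
    parsed = urlparse(url)
    return {
        "scheme": parsed.scheme,
        "user": parsed.username,
        "password": "***" if parsed.password else None,
        "host": parsed.hostname,
        "port": parsed.port,
        "database": parsed.path.lstrip("/"),
    }


# Hypothetical value for illustration only.
info = describe_database_url("postgresql://gadget:s3cret@db:5432/detector")
print(info)
```

With Docker Compose, the host part should normally match the database service name rather than localhost.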